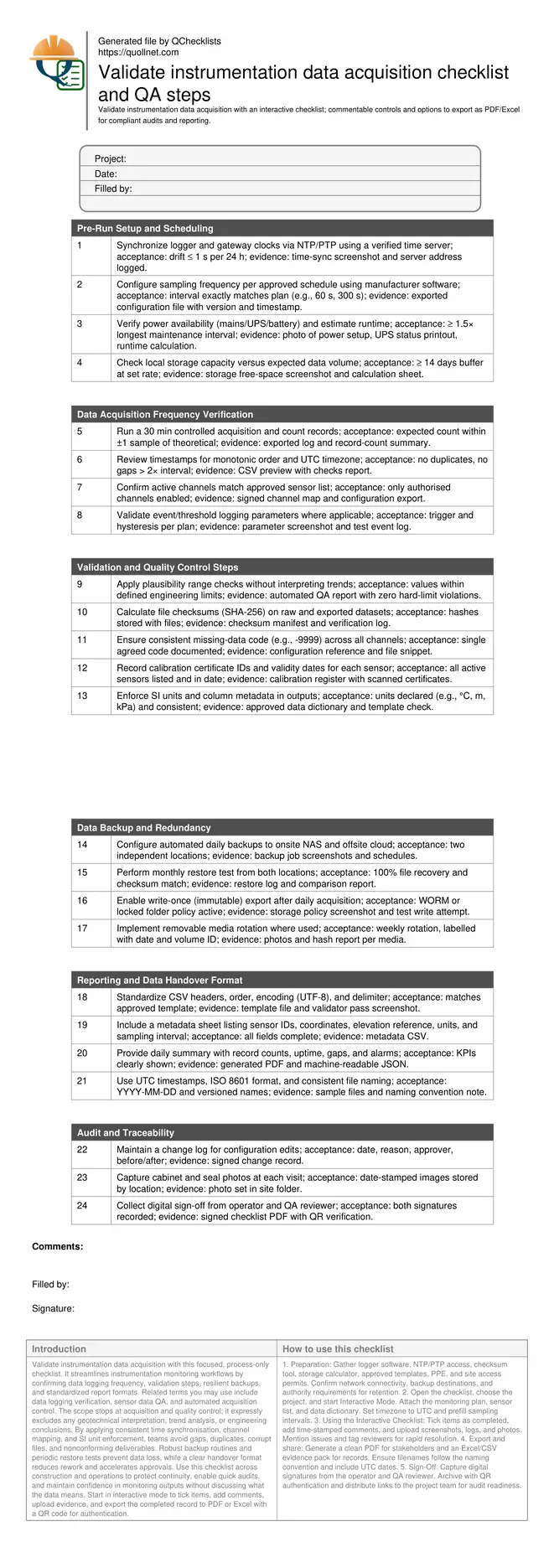

Validate Instrumentation Data Acquisition: Frequency, Validation, Backup, Reporting

Definition: Validate instrumentation data acquisition for construction monitoring teams by verifying logging frequency, automated validation checks, redundant backups, and standardized reporting, excluding any geotechnical interpretation of the data.

- Confirm sampling frequency, time sync, and channel configuration match plan.

- Run structured validation checks to catch gaps, duplicates, and unit errors.

- Implement redundant backups with restore tests for assured data continuity.

- Work interactively: tick items, add comments, and export a QR-coded record for audit readiness.

Validate instrumentation data acquisition with this focused, process-only checklist. It streamlines instrumentation monitoring workflows by confirming data logging frequency, validation steps, resilient backups, and standardized report formats. Related terms you may use include data logging verification, sensor data QA, and automated acquisition control. The scope stops at acquisition and quality control; it expressly excludes any geotechnical interpretation, trend analysis, or engineering conclusions. By applying consistent time synchronisation, channel mapping, and SI unit enforcement, teams avoid gaps, duplicates, corrupt files, and nonconforming deliverables. Robust backup routines and periodic restore tests prevent data loss, while a clear handover format reduces rework and accelerates approvals. Use this checklist across construction and operations to protect continuity, enable quick audits, and maintain confidence in monitoring outputs without discussing what the data means. Start in interactive mode to tick items, add comments, upload evidence, and export the completed record to PDF or Excel with a QR code for authentication.

- This checklist targets the acquisition layer only: it verifies logging frequency, time synchronisation, channel activation, and SI unit consistency. Structured tests catch gaps and duplicates, and the results are documented with screenshots, exported logs, and signatures for traceable compliance.

- Built-in validation steps flag incorrect headers, missing metadata, unsupported encodings, and checksum mismatches before data release. Teams confirm calibration references and consistent missing-data codes, ensuring downstream users receive clean, machine-readable files without manual fixes or assumptions.

- Resilient backup routines use two independent locations and routine restore tests, minimizing downtime and loss risk. Standardised CSV/JSON outputs, UTC timestamps, and clear file naming support automated ingestion pipelines and multi-stakeholder reporting with minimal friction during reviews and audits.

- Interactive online checklist with tick, comment, and export features secured by QR code.

Pre-Run Setup and Scheduling

Data Acquisition Frequency Verification

Validation and Quality Control Steps

Data Backup and Redundancy

Reporting and Data Handover Format

Audit and Traceability

Control acquisition frequency and time synchronisation

Getting frequency right starts with precise timekeeping. Synchronise logger clocks via NTP or PTP to limit drift and avoid duplicate or missing records during merges. Configure sampling intervals exactly per the monitoring plan, then prove performance with a short controlled run that counts expected versus actual records. Review timestamps for monotonic order, single timezone (UTC), and the absence of gaps larger than twice the configured interval. Lock approved channels and disable unused inputs to prevent stray columns in outputs. Finally, validate power and storage headroom so data does not stop during weekends or bad weather. These steps protect downstream processing pipelines and prevent retroactive fixes. The goal is clean, predictable time series at the source without discussing what the data means—only how it is acquired, stored, and handed over for others to interpret.

- Use NTP/PTP; target drift ≤ 1 s per 24 h.

- Run a 30 min test; the record count must be within ±1 sample of the expected count.

- Timestamps in UTC, ISO 8601, monotonic order only.

- Disable unauthorised channels to reduce spurious columns.
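The timestamp checks above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed implementation: the function name `check_timestamps` and its parameters are hypothetical, timestamps are assumed to arrive as ISO 8601 strings with an explicit `+00:00` offset, and the thresholds (gaps no larger than twice the interval, count within ±1 sample) are taken from the text.

```python
from datetime import datetime, timedelta, timezone


def check_timestamps(stamps, interval_s, run_s):
    """Return pass/fail flags for a controlled test run (hypothetical helper).

    stamps: ISO 8601 strings with an explicit offset,
            e.g. "2024-01-01T00:00:00+00:00".
    interval_s: configured sampling interval in seconds.
    run_s: duration of the test run in seconds.
    """
    times = [datetime.fromisoformat(s) for s in stamps]

    # Naive or non-UTC timestamps fail outright; the other checks are
    # skipped and reported as failed.
    if not all(t.utcoffset() == timedelta(0) for t in times):
        return {"utc_only": False, "monotonic": False,
                "max_gap_ok": False, "count_ok": False}

    # Seconds between consecutive records.
    deltas = [(b - a).total_seconds() for a, b in zip(times, times[1:])]
    expected = int(run_s // interval_s)

    return {
        "utc_only": True,
        # Strictly increasing, so duplicates also fail.
        "monotonic": all(d > 0 for d in deltas),
        # No gap larger than twice the configured interval.
        "max_gap_ok": all(d <= 2 * interval_s for d in deltas),
        # Record count within +/- 1 sample of the expected count.
        "count_ok": abs(len(times) - expected) <= 1,
    }


# Example: a 30 min run at a 60 s interval with no gaps.
start = datetime(2024, 1, 1, tzinfo=timezone.utc)
stamps = [(start + timedelta(seconds=60 * i)).isoformat() for i in range(30)]
result = check_timestamps(stamps, interval_s=60, run_s=1800)
```

The returned flags are pass/fail evidence only, which matches the checklist's scope: they say nothing about what the measured values mean.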

Structured validation without interpretation

Validation confirms data quality without inferring behaviour. Apply plausibility ranges and missing-data codes consistently, ensuring values sit within predefined engineering limits and that gaps are clearly marked. Compute checksums on raw and exported files to prove integrity end-to-end. Enforce SI units and consistent headers so machine workflows do not break. Track calibration certificates and link their IDs to channel metadata for traceability. These checks surface format and continuity issues early, before they contaminate reports. Avoid commenting on trends or causes; limit entries to pass/fail evidence like screenshots, manifests, and signed records. When a validation fails, document the defect with location, time, responsible party, and corrective action taken within the acquisition chain.

- Apply range checks; zero hard-limit violations allowed.

- Use a single missing-data code across all channels.

- Store SHA-256 hashes with datasets for integrity.

- Reference calibration IDs and expiry dates.
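As a rough sketch of the range and integrity checks above, the following Python uses only the standard library. The missing-data code `-9999.0`, the function names, and the example limits are assumptions for illustration; the actual code and limits would come from the monitoring plan.

```python
import hashlib

# Hypothetical single missing-data code shared by all channels.
MISSING = -9999.0


def range_violations(values, low, high, missing=MISSING):
    """Return indices of values outside [low, high], ignoring the missing code.

    NaN values are also reported, because they fail the comparison.
    """
    return [
        i for i, v in enumerate(values)
        if v != missing and not (low <= v <= high)
    ]


def sha256_of(path, chunk_size=65536):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


# Example: one reading breaches the assumed hard limits of 0 to 10.
readings = [1.0, MISSING, 12.5, 3.0]
violations = range_violations(readings, low=0.0, high=10.0)
```

Storing the digest from `sha256_of` alongside both the raw and exported files lets a later reviewer recompute and compare hashes without opening the data.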

Backups, restore tests, and standardised reporting

Backups only count when restores work. Use two independent locations—onsite NAS and offsite cloud—and schedule daily jobs with monthly restore tests to confirm full recovery and matching checksums. Lock exports using write-once settings or retention rules to prevent accidental edits. For reporting, publish CSV with UTF-8 encoding and standardised headers, plus a metadata sheet covering IDs, coordinates, units, and sampling interval. Provide summary KPIs (counts, uptime, gaps) in both PDF and JSON to serve humans and machines. Adopt UTC timestamps and clear naming conventions to keep pipelines deterministic. Retention should follow approved project specifications and authority requirements, documented with owners and durations.

- Keep two copies; test restores monthly.

- Enable write-once or immutable folders for exports.

- Deliver CSV + JSON; include a complete metadata sheet.

- Use UTC and versioned, predictable file names.
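A restore test with matching checksums, as described above, could be sketched as follows. The function names `manifest` and `restore_mismatches` are hypothetical, and the sketch assumes both the source export folder and the restored copy are reachable as local directories.

```python
import hashlib
from pathlib import Path


def manifest(root):
    """Map each file's relative path to its SHA-256 hex digest.

    Files are read whole for brevity; very large files would be better
    hashed in chunks.
    """
    root = Path(root)
    return {
        p.relative_to(root).as_posix(): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def restore_mismatches(source_dir, restored_dir):
    """Return relative paths that are missing, extra, or differ after a restore."""
    src = manifest(source_dir)
    dst = manifest(restored_dir)
    return sorted(
        name for name in src.keys() | dst.keys()
        if src.get(name) != dst.get(name)
    )
```

An empty list from `restore_mismatches` is the pass condition; any returned path can be recorded in the checklist as evidence of a failed restore.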

How to use this interactive acquisition validation checklist

- Preparation: Gather logger software, NTP/PTP access, checksum tool, storage calculator, approved templates, PPE, and site access permits. Confirm network connectivity, backup destinations, and authority requirements for retention.

- Start: Open the checklist, choose the project, and start Interactive Mode. Attach the monitoring plan, sensor list, and data dictionary. Set timezone to UTC and prefill sampling intervals.

- Using the Interactive Checklist: Tick items as completed, add time-stamped comments, and upload screenshots, logs, and photos. Mention issues and tag reviewers for rapid resolution.

- Export and share: Generate a clean PDF for stakeholders and an Excel/CSV evidence pack for records. Ensure filenames follow the naming convention and include UTC dates.

- Sign-Off: Capture digital signatures from the operator and QA reviewer. Archive with QR authentication and distribute links to the project team for audit readiness.
